Assistant Professor

Director: Spatial Intelligence Research Group (SINRG)

Institute for the Wireless Internet of Things

Department of Electrical and Computer Engineering

Northeastern University

Office: 650D EXP

Address: 360 Huntington Ave, Boston, MA 02115

Email: m.dasari@northeastern.edu

Research Interests: Spatial Intelligence, AR/VR Systems, Digital Twins, Computer Networks, Wireless Sensing and Communications, Mobile and Wearable Computing

TVMC: Time-Varying Mesh Compression Using Volume-Tracked Reference Meshes

ACM MMSys 2025 (Conference on Multimedia Systems)

Paper

Code

🏆 Best Reproducible Paper Award

RoboTwin: Remote Human-Robot Collaboration in XR

ACM HotMobile 2025 (Workshop on Mobile Computing Systems and Applications)

Paper

Code

Video

🏆 Best Demo Award

Fumos: Neural Compression and Progressive Refinement for Continuous Point Cloud Video Streaming

IEEE VR 2024 (Conference on Virtual Reality and 3D User Interfaces) - selected to appear in IEEE Transactions on Visualization and Computer Graphics (TVCG)

Paper

Video

StageAR: Markerless Mobile Phone Localization for AR in Live Events

IEEE VR 2024 (Conference on Virtual Reality and 3D User Interfaces)

Paper

Teaser

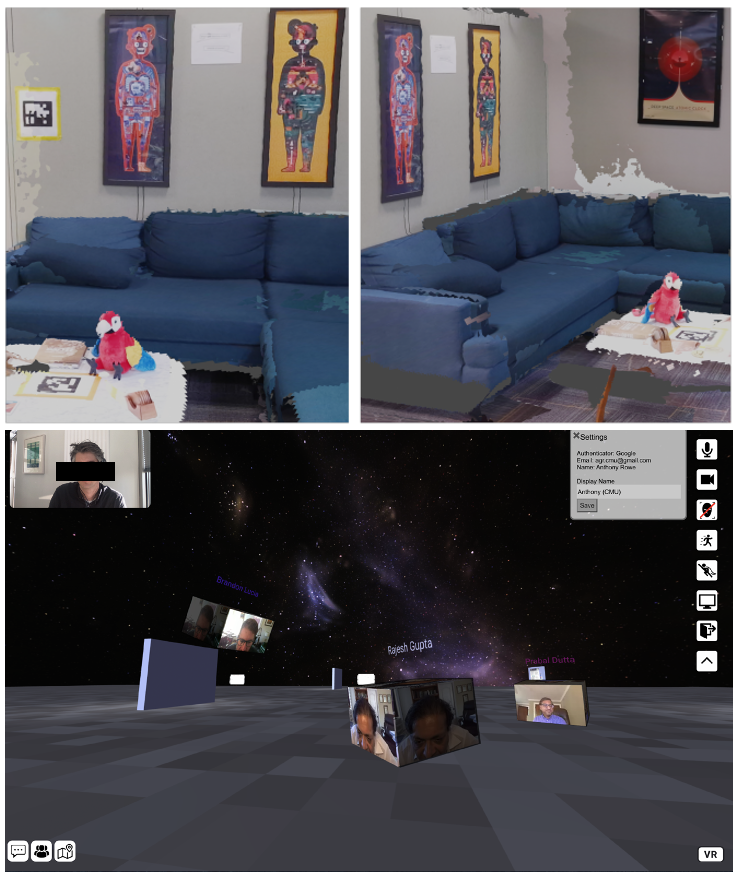

MeshReduce: Scalable and Bandwidth Efficient Scene Capture for 3D Telepresence

IEEE VR 2024 (Conference on Virtual Reality and 3D User Interfaces)

Paper

Teaser

Scaling VR Video Conferencing

IEEE VR 2023 (Conference on Virtual Reality and 3D User Interfaces)

Paper

Slides

Code

Teaser

RenderFusion: Balancing Local and Remote Rendering for Interactive 3D Scenes

IEEE ISMAR 2023 (Symposium on Mixed and Augmented Reality)

Paper

Slides

Code

Teaser

RoVaR: Robust Multi-agent Tracking through Dual-layer Diversity in Visual and RF Sensing

ACM IMWUT/UbiComp 2023 (Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies)

Paper

Data

AR Game

Hyper-local Conversational Agents for Serving Spatio-temporal Events of a Neighbourhood

ACM IMWUT/UbiComp 2022 (Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies)

Paper

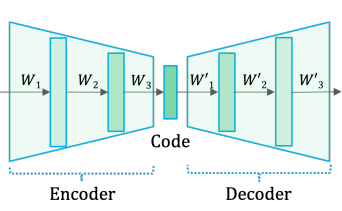

Swift: Adaptive Video Streaming with Layered Neural Codecs

USENIX NSDI 2022 (Symposium on Networked Systems Design and Implementation)

Paper

Slides

Code

Video

Cyclops: An FSO-based Wireless Link for VR Headsets

ACM SIGCOMM 2022 (Conference on Data Communications)

Paper

PARSEC: Streaming 360-Degree Videos Using Super-Resolution

IEEE INFOCOM 2020 (Conference on Computer Communications)

Paper

Slides

Code

Video

Impact of Device Performance on Mobile Internet QoE

ACM IMC 2018 (Internet Measurement Conference)

Paper

Slides

Data

Video

A full list of papers can be found here!

In just three decades, the Internet has transformed long-distance communication through voice and video calls. Despite these advances, however, today's applications (e.g., Zoom and FaceTime) still lack the essential subtleties of "telepresence", i.e., everyday face-to-face, co-located communication with realistic eye contact, body language, and physical presence in a virtual space. Although the concept has been around for decades, only recent advances in high-performance graphics hardware, better depth-sensing technology, and faster software pipelines have made practical real-time 3D telepresence systems feasible. This project investigates several research questions: 1) How can we capture and digitize a 3D scene with low latency and at practical bitrates for real-time streaming over the Internet? 2) Do traditional 2D content distribution strategies work well for 3D streaming? 3) How can we render high-quality 3D content on resource-constrained AR/VR headsets? 4) What kinds of 3D applications can bring everyday serendipity into virtual spaces?

Publications: IEEE VR 2024, IEEE VR 2023, IEEE ISMAR 2023, ACM SIGCOMM 2022

Video compression plays a central role in Internet video applications by reducing network bandwidth requirements. Traditional algorithm-driven compression methods have served today's Internet video applications well, delivering an acceptable user experience. However, emerging 4K/8K/360-degree video streaming and AR/VR applications require orders of magnitude more bandwidth than today's applications. The monolithic, application-unaware nature of current-generation compression algorithms does not scale to deliver such near-future applications over the Internet. This project explores data-driven techniques that fundamentally rethink source compression and improve the experience of next-generation video applications.

Publications: IEEE VR 2024, USENIX NSDI 2022, IEEE INFOCOM 2020

Interactive and immersive applications such as Augmented Reality (AR) and Virtual Reality (VR) have significant potential for tasks like industrial training, collaborative robotics, and remote operation. A key challenge in delivering these applications is providing accurate and robust tracking of multiple agents (humans and robots) in challenging, everyday environments. Current AR/VR solutions rely on visual tracking algorithms (e.g., SLAM/odometry) that are highly sensitive to the environment (e.g., lighting conditions). This project explores augmenting visual tracking with RF positioning (e.g., WiFi/UWB) to improve accuracy (down to the sub-centimeter level), robustness (across diverse environmental conditions), and scalability across multiple agents. The key challenge is how to make two completely different sensing modalities complement each other with little or no infrastructure support.

Publications: IEEE VR 2024, ACM IMWUT/UbiComp 2023

This is an interdisciplinary course covering the following topics from emerging immersive media, computer networks, vision, and graphics. In addition to regular lectures, the class will include hands-on sessions with a variety of state-of-the-art XR headsets on the market.

- Fundamental problems of networked applications

- XR fundamentals, headsets, glasses, wearables

- XR content representations

- 2D, Flat 360, 3D/Volumetric videos (RGB-D, point cloud, mesh, NeRF)

- Monocular, stereoscopic, and multiview videos

- Acquiring XR content for network delivery

- Compression algorithms for RGB, depth videos, point clouds, mesh sequences

- Multiview compression algorithms

- Streaming fundamentals

- Stored, live, and interactive streaming protocols

- Streaming XR content (videos, point clouds, meshes, holograms, spaces)

- Local streaming via WiFi, mmWave and optical wireless links

- Remote and hybrid rendering

- Visual and wireless sensing for person tracking

- Networked XR platforms such as ARKit/ARCore, Unity, and Open3D

- Building XR systems such as 3D telepresence (VR), Spatial Web (AR)

- Tracking fundamentals: eyes, hands, face, head, body, etc.; outside-in and inside-out tracking

EECE 5698: Networked XR Systems, Spring 2024, Northeastern University

This class is about fundamental principles of wireless and mobile networking. Some of the topics that we will cover are the following:

- Wireless Signals, Properties, Protocols

- Wireless Physical Layer

- Spectrum Sharing

- RF-based Localization

- Wireless Link Layer

- Mobile IP

- Wireless Transport

- Mobile and Wireless Applications

- Mobile Web

- Mobile Video

- RF Sensing

- Mobile Devices, Performance and Energy Management

- Deep Learning in Mobile and Wireless Applications

2025

- ACM Transactions on Sensor Networks - Special Issue on Immersive Computing, Guest Editor

- USENIX NSDI, Program Committee

- IEEE VR, Program Committee

- ACM MMSys, Program Committee

2024

- ACM Transactions on Multimedia Systems, Invited Reviewer

- USENIX ATC, Program Committee

- IEEE COMSNETS, Program Committee

- IEEE ICDCS, Program Committee

- ACM IMC, Program Committee

- ACM CoNEXT, Program Committee

- ACM MM, Program Committee

- ACM MMSys, Program Committee

2023

- ACM SIGCOMM Workshop on Emerging Multimedia Systems, Program Co-Chair

- ACM IMC, Program Committee

- ACM CoNEXT, Program Committee

- ACM MM, Program Committee

- ACM MMSys, Program Committee

2022

- ACM SIGCOMM, Artifact Evaluation Committee (AEC)

- ACM IMC, Program Committee

- ACM MM, Program Committee

- ACM MMSys, Program Committee

2021

- ACM Student Workshop at MobiSys, Program Co-Chair

- ACM IMWUT/UbiComp, Reviewer

- ACM MM, Program Committee

2020 & Before

- IEEE Pervasive Computing, Reviewer

- ACM SIGCOMM, Artifact Evaluation Committee (AEC)

- ACM S3 Workshop at MobiCom, Program Co-Chair

- ACM Transactions on Sensor Networks, Reviewer

My research has been generously supported by the following institutions!

In the past, I have worked with and been mentored by the following rockstars in my field.

- Postdoc mentors: Anthony Rowe, Srinivasan Seshan

- PhD mentors: Samir Das, Aruna Balasubramanian, Dimitris Samaras, Himanshu Gupta, Mike Ferdman

- Industry research internship mentors: Karthik Sundaresan, Vijay Gopalakrishnan, Fahim Kawsar, Kyu-Han Kim